As mobile and IoT deployments play an increasing role in enterprise networks, organizations are looking to more suitable architectures, such as edge computing, to ease their security and device management burdens.

Edge computing is still in its relative infancy, and the platforms and services needed to orchestrate edge deployments are themselves still evolving. In this article, we’ll look at some of the issues and the options available.

Edge Computing on the Rise

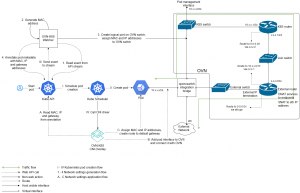

Today’s enterprise networks typically have a dispersed, global footprint, with mobile and remote workers, multiple sites, and a wide variety of hardware and access points. Rather than relying on a centralized model built around a single data warehouse, network management for this new breed of corporate campus must itself become more distributed.

Edge computing supports this by placing localized management nodes close to the devices and other network resources operating at each location. It typically uses a distributed, open architecture with decentralized processing power, so that information can be processed by individual network devices or local servers.
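
The placement idea can be sketched in a few lines of Python. This is a toy model, assuming each device simply attaches to the geographically closest edge node; the node names, coordinates, and helper functions are hypothetical, and a real deployment would more likely choose a node by measured network latency or site topology.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeNode:
    name: str
    x_km: float  # position on a simple flat site map (assumption)
    y_km: float


def nearest_node(device_xy: tuple[float, float], nodes: list[EdgeNode]) -> EdgeNode:
    """Return the edge node closest to a device by straight-line distance."""
    dx, dy = device_xy
    return min(nodes, key=lambda n: math.hypot(n.x_km - dx, n.y_km - dy))


def assign_devices(devices: dict[str, tuple[float, float]],
                   nodes: list[EdgeNode]) -> dict[str, str]:
    """Map each device ID to the name of its local management node."""
    return {dev: nearest_node(xy, nodes).name for dev, xy in devices.items()}


# Hypothetical site with two local nodes and three devices.
nodes = [EdgeNode("factory-floor", 0.0, 0.0), EdgeNode("warehouse", 5.0, 0.0)]
devices = {"sensor-1": (0.5, 0.2), "sensor-2": (4.8, 0.1), "camera-7": (1.0, 1.0)}
print(assign_devices(devices, nodes))
# {'sensor-1': 'factory-floor', 'sensor-2': 'warehouse', 'camera-7': 'factory-floor'}
```

Each device is then managed by the node nearest to it, so its traffic need not leave the location it operates in.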

Because not every transaction has to travel to a central data center or cloud, edge computing can accelerate data streams and process information in real time with minimal latency. For latency-critical applications such as financial transactions, even a few milliseconds of delay can be unacceptable, and processing data at the edge helps keep response times low enough to reduce this risk.
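
Part of that latency advantage is simple physics. The sketch below estimates round-trip propagation delay alone, assuming light in optical fiber travels at roughly 200,000 km/s and that the path is a straight run of fiber; the distances are hypothetical, and real networks add routing, queuing, and processing delays on top.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed,
# about 200,000 km/s (an approximation; real fiber varies slightly).
FIBER_KM_PER_S = 200_000


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over a straight fiber path.

    Ignores routing detours, queuing, and processing time, all of which
    add further delay in practice.
    """
    return 2 * distance_km / FIBER_KM_PER_S * 1000


# Hypothetical distances from a device to where its data is processed.
for label, km in [("on-site edge node", 1), ("regional data center", 300),
                  ("distant cloud region", 1_500)]:
    print(f"{label:>22}: {propagation_rtt_ms(km):6.2f} ms")
```

Under these assumptions a 1,500 km round trip costs about 15 ms before any processing happens, against roughly 0.01 ms for a node 1 km away.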

(Image source: Network World)

By allowing large amounts of data to be processed near their source, edge computing also reduces internet bandwidth usage.
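
To put rough numbers on that saving, the arithmetic below compares shipping every raw sensor reading to a central site with forwarding only per-minute summaries from an edge node. The site size, message size, and reporting rates are hypothetical figures chosen for illustration, not measurements.

```python
SECONDS_PER_DAY = 86_400


def daily_upload_gb(devices: int, messages_per_s: float, bytes_per_message: int) -> float:
    """Data volume per day (in GB) sent upstream at the given message rate."""
    return devices * messages_per_s * bytes_per_message * SECONDS_PER_DAY / 1e9


# Hypothetical site: 1,000 sensors, each producing 10 readings/s of 64 bytes.
raw = daily_upload_gb(1_000, 10, 64)

# Same site, but the edge node forwards one 64-byte summary per sensor per minute.
summarized = daily_upload_gb(1_000, 1 / 60, 64)

print(f"raw: {raw:.2f} GB/day, summarized: {summarized:.3f} GB/day, "
      f"reduction: {raw / summarized:.0f}x")
```

With these assumed figures, the upstream volume falls from about 55 GB to under 0.1 GB per day, a 600-fold reduction.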

Mobile Edge Computing (MEC) and IoT

Now used as a generic term covering almost all edge computing architectures, mobile edge computing (MEC) provides computing, analytics, and storage capacity at the edge of a network. Its growth has been accelerated by the increasing adoption of Internet of Things (IoT) technologies.

The IoT ecosystem of millions of interconnected, online devices and sensors has created a need for faster data processing, and for IoT elements to connect to edge, centralized, and cloud-based data centers. These components are often deployed in mission-critical settings (e.g., in the healthcare and manufacturing sectors), with an attendant need for very low-latency data operations.

MEC applications typically aim for transfer latencies of a few milliseconds or less. They can also perform real-time data analysis locally, which greatly reduces the amount and frequency of data that must be sent to any distant centralized location. Sensitive data can also be kept within local zones rather than exposed to the internet, which strengthens corporate security.
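
The local-analysis idea can be illustrated with a small Python sketch. It assumes a hypothetical edge node that collects a window of raw readings per sensor, computes a summary on the spot, and flags readings above a threshold; the class, function, sensor ID, readings, and threshold are illustrative assumptions, not part of any MEC standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    sensor_id: str
    count: int
    mean_value: float
    max_value: float
    alerts: list[float]  # raw readings that crossed the alert threshold


def summarize_window(sensor_id: str, readings: list[float],
                     alert_threshold: float) -> WindowSummary:
    """Analyze one window of raw readings locally and keep only a summary.

    The raw list stays on the edge node; only the returned summary (which
    includes any out-of-range readings) would be forwarded to a central site.
    """
    if not readings:
        raise ValueError("window contains no readings")
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        mean_value=mean(readings),
        max_value=max(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


# Hypothetical temperature readings (degrees C) from one machine sensor.
window = [41.2, 40.8, 41.5, 58.9, 41.0]
summary = summarize_window("press-3", window, alert_threshold=55.0)
print(summary)
```

Only one small record per window leaves the site, yet the single out-of-range reading is still reported upstream.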

As with any IT environment, the devil is in the details. Designing an application-specific or device-specific system for edge computing requires a complex combination of hardware, software, and networking. Early adoption of MEC has been hampered by the cost and complexity of deploying enterprise IoT systems.

Over a dozen standards bodies, industry consortia, and open-source projects (including ETSI, the OpenFog Consortium, EdgeX Foundry, and OpenStack StarlingX) are involved in defining the architecture for mobile edge computing, and no single standard has yet emerged. MEC architectures therefore have to be designed with the flexibility and adaptability to cope with this uncertainty, as well as with changing business requirements.

A number of tools and services are available to assist in MEC deployment, which we shall now discuss.

Kubernetes

(Image source: Kubernetes.io)

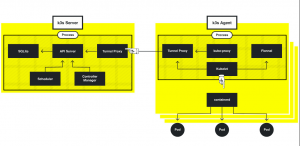

Designed for managing containerized workloads and services, Kubernetes is a portable, extensible, open-source platform that facilitates both declarative configuration and automation. Its name comes from the Greek word for “helmsman” or “pilot”, which is also the root of the English words “governor” and “cybernetic”. K8s, an abbreviation commonly used for the platform in the trade literature, is formed by replacing the eight letters “ubernete” with the digit “8”.
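
The K8s abbreviation is an example of a numeronym, the same convention that turns “internationalization” into “i18n”. A short, hypothetical Python helper shows the rule: keep the first and last letters and replace everything between them with a count of the letters removed.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of middle letters + last letter."""
    if len(word) <= 3:
        return word  # too short for the abbreviation to save anything
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))            # K8s
print(numeronym("internationalization"))  # i18n
```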

The Kubernetes project was pioneered at Google, which released it as open source in 2014. It is simultaneously a container platform, a microservices platform, and a portable cloud platform. The Kubernetes management environment is based on containers, and it orchestrates computing, networking, and storage infrastructure on behalf of user workloads.

The Kubernetes control plane is built on the same application programming interfaces (APIs) that are available to developers and users. Users can write their own controllers, backed by their own APIs, which can then be targeted by the general-purpose command-line tool, kubectl. This API-centric design has enabled a number of other systems to be built on top of Kubernetes, which itself offers some of the conveniences of Platform as a Service (PaaS), such as deployment, scaling, and load balancing.
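
Custom controllers in Kubernetes generally follow a reconcile pattern: read the desired state declared through the API, observe the actual state, and act to close the gap. The Python sketch below models that loop in memory only. `ToyCluster` and `reconcile` are hypothetical names, and no Kubernetes client library is involved; a real controller would watch the API server rather than edit a dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Stand-in for cluster state: how many replicas of each app are running."""
    running: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], cluster: ToyCluster) -> list[str]:
    """One pass of a controller loop: compare desired vs. observed state, then act.

    Returns the actions taken so the behavior is easy to inspect.
    """
    actions = []
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        cluster.running[app] = want  # observed state now matches the declaration
    # Anything still running that is no longer declared is removed entirely.
    for app in list(cluster.running):
        if app not in desired:
            actions.append(f"stop {cluster.running.pop(app)} x {app}")
    return actions


# Hypothetical edge workloads.
cluster = ToyCluster(running={"sensor-gateway": 1, "legacy-logger": 2})
desired = {"sensor-gateway": 3, "metrics-agent": 1}
print(reconcile(desired, cluster))
print(reconcile(desired, cluster))  # second pass: already converged, no actions
```

Running the loop a second time produces no actions. This idempotence is what makes a declarative control loop safe to run repeatedly.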

Rancher K3s

(Image source: K3s.io)

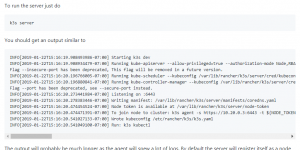

K3s is an open-source project from Rancher Labs that strips Kubernetes down to the bare essentials for edge computing and other use cases where resources are constrained. Rancher Labs previously created the Rancher Kubernetes management platform and RancherOS, a container-based Linux distribution.

K3s is a play on the Kubernetes abbreviation K8s: something half as big as the ten-letter “Kubernetes” would be a five-letter word, stylized as K3s. The name emphasizes the “bare bones” approach of the new platform compared with its parent. Using a number of techniques, K3s squeezes a Kubernetes distribution into 512 MB of RAM with a single binary of around 40 MB. Rarely used Kubernetes plug-ins are omitted from the installation, and K3s consolidates various functions of a Kubernetes distribution into a single process.

Resource-intensive components typically found in the Kubernetes stack are also replaced by less demanding substitutes. For example, instead of a full Docker installation as the container runtime, K3s uses containerd, the core container runtime originally developed as part of Docker. This eliminates many of the components normally bundled with Docker.

(Image source: GitHub)

K3s is optimized for edge computing and standalone devices. The x86-64, ARM64, and ARMv7 architectures are all supported. At the time of writing, the project is offered without official support from Rancher Labs, but the company expects to begin offering official support for K3s by the third quarter of 2019.
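
As a small illustration of that architecture list, the hypothetical helper below maps the machine type reported by Python's `platform.machine()` (on Linux, the same value `uname -m` prints) to one of the three supported targets. The Go-style labels (`amd64`, `arm64`, `arm`) and the exact set of machine strings are assumptions that should be checked against the K3s release notes for the version being deployed.

```python
import platform
from typing import Optional

# Assumed mapping from machine strings to the three architectures K3s lists:
# x86-64 -> amd64, ARM64 -> arm64, ARMv7 -> arm (hypothetical label scheme).
SUPPORTED = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "armv7l": "arm",
}


def k3s_arch(machine: Optional[str] = None) -> str:
    """Return the target architecture label for a host, or raise if unsupported.

    With no argument, the current host's machine type is checked.
    """
    machine = (machine or platform.machine()).lower()
    try:
        return SUPPORTED[machine]
    except KeyError:
        raise ValueError(f"{machine!r} is not one of the architectures K3s lists") from None


for m in ("x86_64", "aarch64", "armv7l"):
    print(m, "->", k3s_arch(m))
```

A check like this could run as a pre-flight step before a device is enrolled, so that unsupported hardware is rejected with a clear message.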

Enterprises using edge computing for IoT and mobile deployments can use K3s and other tools like it to manage their system architecture.