With cooling requirements accounting for some 40% of the power consumed by an average data center, optimizing the strategies and technologies used for temperature control makes sound economic and operational sense. Yet many data centers still rely on outdated methodologies and management techniques for environmental control.

The materials, technology, and strategies for data center cooling continue to evolve. Here are some of the prevailing trends, and some best practices.

Know The Purpose Of Cooling

It sounds like a no-brainer, but having a clear idea at the outset of why cooling is required, and where it needs to be applied, will help both in planning and implementing your cooling strategy and in justifying to the financial powers that be why it needs a place in their budget.

An effective cooling strategy for the data center as a whole increases operational efficiency while also relieving the pressure on the cooling systems built into your hardware, effectively extending the life of your equipment. It also frees up power for your user-level IT equipment, increasing its uptime.

Decide On An Acceptable Temperature Range

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) created a data center classification system in 2011, with a matching range of recommended operating temperatures. For general purposes, the recommended range tops out at about 81 F (27 C), while the maximum allowable temperature for the most tolerant equipment class can reach 113 F (45 C). Note that the allowable maximum is the level at which increased equipment failure rates may occur, so it’s in your best interests to keep ambient temperatures well below it.
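
As a rough illustration of how these limits might be applied to sensor readings, the Python sketch below labels an inlet temperature against the two figures quoted above. It checks only the upper limits (the recommended range also has a lower bound, which is ignored here), and the function and constant names are hypothetical, not part of any ASHRAE tool.

```python
# Thresholds taken from the figures quoted above; adjust for your own
# equipment class and policy. Only upper limits are checked.
RECOMMENDED_MAX_C = 27.0  # upper end of the recommended range
ALLOWABLE_MAX_C = 45.0    # allowable maximum for the most tolerant class


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def classify_inlet(temp_c: float,
                   recommended_max_c: float = RECOMMENDED_MAX_C,
                   allowable_max_c: float = ALLOWABLE_MAX_C) -> str:
    """Label an inlet temperature against the two upper limits."""
    if temp_c <= recommended_max_c:
        return "within recommended"
    if temp_c <= allowable_max_c:
        return "within allowable"
    return "exceeds allowable"


# Hypothetical readings from three rack inlets.
for reading in (22.0, 30.0, 47.0):
    print(f"{reading:.1f} C ({c_to_f(reading):.1f} F): {classify_inlet(reading)}")
```

Converting 27 C gives 80.6 F, which is where the rounded 81 F figure comes from.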

Deciding on your optimum level is a bit of a balancing act. On the one hand, a data center which is held at a somewhat higher temperature will require less cooling (and therefore less energy consumption and cooling equipment). On the other, running your hardware at a higher temperature runs the risk of glitches and outright failures.

Rationalize Your CRAC Units

Computer room air conditioning (CRAC) units may account for a major portion of a data center’s spending on cooling equipment, so there are savings to be had in finding ways to reduce the number of units required.

Maintaining a slightly higher ambient temperature level is one way to achieve this: If you reduce the amount of cooling required, you can cut down on the use of air conditioning (saving energy and maintenance costs).

Using variable-speed CRAC units rather than fixed-speed ones will enable you to run your cooling equipment only at the speed required to maintain your desired temperature. Alternatively, standard fixed-speed units may be run at full load on a periodic basis: long enough to cool the environment to well below your target temperature, at which point they’re switched off. Once the temperature climbs back to a set level, the units are switched on again.
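
The two control strategies can be sketched in a few lines of Python. This is a simplified illustration, not vendor firmware: the on/off thresholds, the 2 C proportional band and the 20% minimum fan speed are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class FixedSpeedCycler:
    """On/off control with a dead band for a fixed-speed unit.

    The unit switches on when the temperature reaches `on_above_c`, runs at
    full load until it falls to `off_below_c`, then stays off again.
    """
    on_above_c: float
    off_below_c: float
    running: bool = False

    def update(self, temp_c: float) -> bool:
        """Feed in the latest temperature; return True if the unit should run."""
        if self.running and temp_c <= self.off_below_c:
            self.running = False
        elif not self.running and temp_c >= self.on_above_c:
            self.running = True
        return self.running


def variable_speed_fraction(temp_c: float, setpoint_c: float,
                            band_c: float = 2.0,
                            min_fraction: float = 0.2) -> float:
    """Proportional speed command (0..1) for a variable-speed unit.

    Speed rises linearly from `min_fraction` at the setpoint to full speed
    at setpoint + band_c, and is clamped to that range.
    """
    error = (temp_c - setpoint_c) / band_c
    command = min_fraction + (1.0 - min_fraction) * error
    return max(min_fraction, min(1.0, command))


# Hypothetical example: switch on at 26 C, off once cooled to 21 C.
cycler = FixedSpeedCycler(on_above_c=26.0, off_below_c=21.0)
states = [cycler.update(t) for t in (24.0, 26.5, 23.0, 20.5, 24.0, 26.0)]
print(states)
print(variable_speed_fraction(25.0, setpoint_c=24.0))
```

The gap between the on and off thresholds (the dead band) is what stops a fixed-speed unit from switching on and off every few seconds while the temperature hovers around a single setpoint.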

Optimize Your Hot & Cold Aisles

Within data centers, hot and cold aisle containment strategies remain the primary methods used by leading companies to reduce energy consumption and optimize equipment performance. However, there are factors to take into account in ensuring that these approaches yield optimal results.

Hot-aisle containment (HAC) and cold-aisle containment (CAC) systems work by separating the cold supply airflow from the hot equipment exhaust air. Care must be taken to minimize the extent to which hot and cold air mix, so that a uniform, controllable supply temperature can be maintained and delivered to the intake of your IT equipment. The air returned to the AC coil will then be warm and dry.
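
Why mixing matters can be shown with a simple energy balance. Assuming both air streams have the same density and specific heat, the air reaching an equipment intake is the flow-weighted average of the cold supply and any hot exhaust that leaks into it. The temperatures and flow shares in this sketch are hypothetical.

```python
def mixed_temperature_c(supply_flow: float, supply_temp_c: float,
                        leak_flow: float, exhaust_temp_c: float) -> float:
    """Temperature after cold supply air mixes with leaked hot exhaust air.

    Assumes equal density and specific heat for both streams, so the result
    is the flow-weighted average. Flows can be in any consistent unit.
    """
    total = supply_flow + leak_flow
    if total <= 0:
        raise ValueError("total flow must be positive")
    return (supply_flow * supply_temp_c + leak_flow * exhaust_temp_c) / total


# Hypothetical case: 18 C supply, 38 C exhaust, 10% and 30% recirculation.
for leak_share in (1, 3):
    inlet = mixed_temperature_c(10 - leak_share, 18.0, leak_share, 38.0)
    print(f"{leak_share * 10}% recirculation -> inlet {inlet:.1f} C")
```

With 10% recirculation, an 18 C supply arrives at the intake at 20 C; at 30% it arrives at 24 C, which would push operators toward a colder, more costly supply temperature to compensate.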

The architecture of the room and the layout of your IT equipment will determine the design of the containment system and the positioning of the hot or cold aisles. The locations of your supply and return air paths will also influence this design, which must be optimized to reduce mixing between the two air streams.

Consider The Kyoto Wheel

Consisting of a wound, corrugated metal wheel approximately 3 meters (10 feet) in diameter, the Kyoto Wheel rotates slowly through a housing divided into twin compartments. Hot air from the data center passes through the wheel in one compartment, where the metal absorbs its heat; as the wheel turns into the other compartment, a stream of cold outside air carries that heat away and is vented. The cooled data center air is then fed back into the room to cool the equipment.
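
Heat transfer in a rotary wheel like this is commonly described by its effectiveness: the fraction of the largest possible temperature drop that the wheel actually achieves. The sketch below assumes equal air flows on both sides of the wheel; the effectiveness value and temperatures are hypothetical, not published Kyoto Wheel figures.

```python
def wheel_supply_temp_c(room_exhaust_c: float, outside_air_c: float,
                        effectiveness: float) -> float:
    """Temperature of data center air after passing through a heat wheel.

    Standard effectiveness model for a heat exchanger with equal flows on
    both sides: the wheel achieves `effectiveness` (0..1) of the largest
    possible temperature change, room_exhaust_c - outside_air_c.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return room_exhaust_c - effectiveness * (room_exhaust_c - outside_air_c)


# Hypothetical figures: 35 C hot-aisle air, 15 C outside air, 75% effectiveness.
print(wheel_supply_temp_c(35.0, 15.0, 0.75))
```

With these assumed figures the air returned to the room would be 20 C. The formula also shows the approach’s limit: the returned air can never be cooler than the outside air.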

With a Kyoto Wheel arrangement, the air flow in the data center forms a closed loop, and the small volume of air carried over as the wheel rotates minimizes the transfer of moisture and particulates from one compartment to the other. Low-speed, low-maintenance fans and motors are used, minimizing energy consumption (many systems run on solar power or batteries) and extending the life of a system to 25 years or more.

Water Cooling in Warmer Climates?

In areas with warm climates, hot outside air can be drawn through a system of wet filters; as the water in them evaporates, it cools the air passing through. These adiabatic or water-cooled systems also consist of twin chambers, with the filters providing a barrier between the outside air and the interior of the data center.
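
Evaporative coolers are usually rated by their saturation effectiveness: how much of the gap between the air’s dry-bulb and wet-bulb temperatures the wet filters close. The sketch assumes a direct (single-stage) evaporative cooler; the 0.85 effectiveness and the weather values are hypothetical.

```python
def evaporative_supply_temp_c(dry_bulb_c: float, wet_bulb_c: float,
                              saturation_effectiveness: float) -> float:
    """Leaving-air temperature of a direct evaporative (adiabatic) cooler.

    Evaporation can at best cool air to its wet-bulb temperature; a real
    wet filter closes only a fraction (the saturation effectiveness) of
    the gap between dry-bulb and wet-bulb temperature.
    """
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb")
    if not 0.0 <= saturation_effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return dry_bulb_c - saturation_effectiveness * (dry_bulb_c - wet_bulb_c)


# Hypothetical hot, dry afternoon: 35 C dry-bulb, 21 C wet-bulb, 85% filter.
print(round(evaporative_supply_temp_c(35.0, 21.0, 0.85), 1))
```

Because the achievable drop depends on the difference between dry-bulb and wet-bulb temperature, these systems work best where warm outside air is also dry.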

To avoid contamination by particulates, these systems require that the filters be changed at regular intervals. The moisture content of the supply air also has to be regulated, to avoid the possibility of condensation on your IT equipment.
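
One way to check the condensation risk is to compare equipment or coil surface temperatures against the dew point of the supply air. The sketch below uses the Magnus approximation with commonly used coefficients; the 2 C safety margin is an arbitrary, hypothetical choice.

```python
import math

# Commonly used Magnus coefficients for saturation over water.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees C


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Approximate dew point (Magnus formula) from temperature and RH."""
    if not 0.0 < relative_humidity_pct <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = (math.log(relative_humidity_pct / 100.0)
             + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c))
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float,
                      relative_humidity_pct: float,
                      margin_c: float = 2.0) -> bool:
    """True if a surface is within `margin_c` (hypothetical) of the dew point."""
    return surface_temp_c <= dew_point_c(air_temp_c, relative_humidity_pct) + margin_c


print(round(dew_point_c(25.0, 50.0), 1))
print(condensation_risk(15.0, 25.0, 50.0), condensation_risk(20.0, 25.0, 50.0))
```

For 25 C air at 50% relative humidity the dew point works out to roughly 13.9 C, so with the default margin any surface colder than about 15.9 C would be flagged.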

Immersive Cooling For High-end Operations

Companies operating in warm climate areas and running large volumes of equipment at high thermal profiles may consider direct water or liquid cooling as an option. For example, for its Aquasar and Leibniz SuperMUC supercomputer systems, IBM developed a negative-pressure system that sucks water around the facility instead of pumping it, in a modular framework where repairs or maintenance to one section won’t affect the operation of the others. Microsoft has gone a step further and dropped a self-contained data center into the ocean (Project Natick).

Plan For Redundancy

Whichever system you use, measures for failover and redundancy need to figure into your plans. At the simplest level, this may mean keeping stand-by units of the same kind available to take over if the main system goes down. Alternatively, a secondary cooling system employing a different technology may be put in place.
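
Stand-by capacity of the same kind is often described as N+1 redundancy: N units to carry the load, plus one spare. A minimal sizing sketch, assuming identical units and a known heat load (both figures in the example are hypothetical):

```python
import math


def units_required(heat_load_kw: float, unit_capacity_kw: float,
                   spares: int = 1) -> int:
    """Identical cooling units needed for N + `spares` redundancy.

    N is the smallest number of units whose combined capacity covers the
    heat load; the spares stand by to take over if a unit fails.
    """
    if heat_load_kw < 0 or unit_capacity_kw <= 0 or spares < 0:
        raise ValueError("invalid sizing inputs")
    n = math.ceil(heat_load_kw / unit_capacity_kw)
    return n + spares


# Hypothetical: 400 kW of IT heat, 90 kW per unit, one spare (N+1).
print(units_required(400.0, 90.0))
```

A 400 kW load served by 90 kW units needs five units to carry the load, so an N+1 design installs six.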

Monitor With DCIM

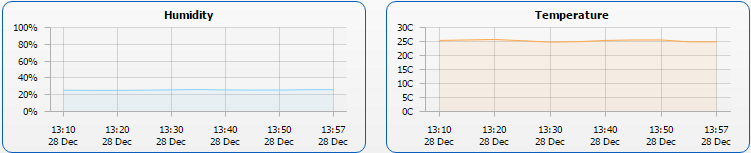

You’ll also need to monitor your data center temperatures to ensure that optimal temperature ranges and performance targets are being met and maintained.

Here, the thermal sensors and infrared detectors of a data center infrastructure management (DCIM) system come into play. These tools can build up a thermal map of your data center, identifying hot spots and potential problem areas. Coupled with some computational fluid dynamics analysis, the system can also generate alternate scenarios to predict how new cooling flows and different inlet temperatures will affect the workings of different systems.
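
The hot-spot detection step can be illustrated with a short sketch that averages recent readings per sensor and flags any that exceed a limit. The sensor IDs, readings and 27 C limit are hypothetical; a real DCIM platform would supply this data through its own interfaces, which are not modeled here.

```python
from statistics import mean


def find_hot_spots(readings: dict[str, list[float]],
                   limit_c: float) -> list[tuple[str, float]]:
    """Return (sensor_id, average) pairs above limit_c, hottest first.

    `readings` maps a sensor ID to its recent inlet temperatures; sensors
    with no readings are skipped.
    """
    averages = {sensor: mean(values)
                for sensor, values in readings.items() if values}
    hot = [(sensor, avg) for sensor, avg in averages.items() if avg > limit_c]
    return sorted(hot, key=lambda item: item[1], reverse=True)


# Hypothetical rack-inlet sensors and readings.
readings = {
    "rack-A1": [22.5, 23.0, 22.8],
    "rack-B4": [28.1, 29.0, 28.4],
    "rack-C2": [26.9, 27.6, 27.9],
    "rack-D3": [],
}
for sensor, avg in find_hot_spots(readings, limit_c=27.0):
    print(f"{sensor}: {avg:.1f} C")
```

Averaging over a short window, rather than reacting to single samples, keeps momentary spikes from being reported as hot spots.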